Explore the National Pilot Interview from Portugal to discover the latest updates on the OSTrails pilot studies. Dive into their national activities and learn about their progress in promoting the use of machine-actionable DMPs, enhancing the FAIRness of digital objects stored in their repositories, and strengthening interoperability among RDM services. This month, we had the pleasure of speaking with Pedro Príncipe, Filipa Pereira and André Vieira from the University of Minho and the Foundation for Science and Technology (FCT).

The University of Minho (UMinho), founded in 1973, has as its mission to generate, disseminate and apply knowledge, based on freedom of thought and the plurality of critical exercises, promoting higher education and contributing to the construction of a model of society based on humanist principles, with knowledge, creativity and innovation as factors of growth, sustainable development, well-being and solidarity.

UMinho, via the Documentation and Libraries service, has been actively involved in EU projects such as EOSC-Beyond and EOSC-Future, and is a member of the OpenAIRE AMKE, contributing to the development of key EOSC core components.

The Foundation for Science and Technology (FCT) is the national public agency supporting research in science, technology and innovation, covering all areas of knowledge. It is a special-regime public institute under the supervision and oversight of the Ministry of Education, Science and Innovation. As the national funding entity, FCT is committed to adopting instruments and services that promote the practice of Open Science within the framework of the scientific activity it funds.

FCT is a mandated organisation of the EOSC Association, with national representatives on EOSC’s Steering Board, and actively participates in EU Open Science projects, including EOSC-Synergy.

Through FCCN, the digital services of FCT, a National Programme on Open Science and Open Research Data is being implemented, which is expected to greatly support the national scientific community in FAIR data management and practices.

- What are you most excited about in OSTrails? What are you looking forward to?

UMinho is participating in the OSTrails project with the aim of contributing to and benefiting from its outcomes, particularly those related to the implementation of use cases outlined in the technical work packages. Having already developed services to support Research Data Management (RDM) activities for its researchers, UMinho expects OSTrails outcomes to enhance the quality of these services and enable new capabilities through improved interoperability between RDM components. Additionally, UMinho is committed to contributing its expertise and experience to the project and its pilot partners.

The FCT team is very motivated to take part in this project. We are looking forward to contributing to the tracking and assessment of the level of FAIRness of the Digital Objects (DOs), sharing experiences and learning from other partners, ensuring engagement and awareness within the scientific community.

- How is planning, tracking and assessing research being realised in your country/scientific domain?

UMinho, a pioneer in open access to knowledge and Open Science, with an OA repository since 2003 and an OA mandate since 2005, recently established a new Open Science Policy (June 2025), with relevant guidelines and requirements aligned with the leading entities in the field. These guidelines value openness, transparency, reproducibility, credibility, efficiency, and quality in research, with a view to strengthening the scientific system and increasing its recognition by citizens. The policy includes, among others, the following: alignment with the requirements of major national and international funders, reinforcing the mandatory deposit of publications in the institutional repository (RepositóriUM) and the use of open licences; clearer guidelines for the management, deposit and opening of research data, aligned with the FAIR data principles; and a requirement for researchers to deposit the data necessary to validate the results presented in scientific publications in a trustworthy repository, preferably in UMinho's institutional repository (DataRepositóriUM) or in an appropriate disciplinary repository.

In addition, UMinho offers a Data Management Plan (DMP) service, a local instance of OpenCDMP / Argos, in which researchers can create their DMPs using templates from the EU and the national funder FCT, as well as a template tailored to PhD students. A CRIS research portal is also under development, which will greatly support the institution's monitoring of research results.

FCT is actively involved in promoting Open Science. A formal RDM policy, aligned with the FAIR data principles and with important initiatives in the field, is expected to come into force soon. FCT is enhancing infrastructures and services to ensure compliance with the policy and with RDM best practices.

In 2022, FCT launched a service dedicated to RDM: the POLEN service. This service provides a Data Management Plan System, based on ARGOS, including FCT’s template aligned with Science Europe’s guidelines. To cover as much of the research data life cycle as possible, other important services will be made available to the community. These include the POLEN Research Data Repository and a Sync & Share Service for active research data.

- Can you provide some details on your pilot's main actors, services and priorities? How will your pilot adopt the results of OSTrails?

The pilot’s main actors will be the scientific community, comprising researchers and service end-users.

Concerning services and priorities:

UMinho provides two main services to support RDM activities, namely a DMP service and a Data Repository. The DMP service, a local instance powered by the OpenCDMP / Argos software, allows University users to create and share their DMPs using a variety of templates, including those from the national funder FCT, the Horizon Europe funding programme, and a simplified template designed specifically for PhD students to effectively plan their research. The institutional data repository, DataRepositóriUM, is based on the Dataverse software and offers researchers a trustworthy service to deposit their research data.

UMinho aims to apply some of the OSTrails developments to implement the following project use cases: develop a maDMP template; extend the repository for archiving maDMPs and create links and relations between archived DMPs and publications; and evaluate the FAIRness of digital objects deposited in the data repository.

FCT, through FCCN, manages PTCRIS, a framework of standards, principles and rules that aims to ensure the integration of information systems supporting scientific activity in a single, coherent and integrated ecosystem. This regulatory framework is based on international reference standards and codes of good practice, including persistent identifiers, data exchange standards, and information security and privacy standards, among others.

As already mentioned, the POLEN service supports researchers in the management and sharing of their research data, namely through the Data Management Plan System and the POLEN Repository.

Strong collaboration with the research communities has been continuous since the beginning of FAIR activities in Portugal. Dedicated workshop series, such as the Data Research Management Forums (co-organised with the University of Minho), bring together researchers, data managers and decision makers to promote an integrated approach to data management in Portugal.

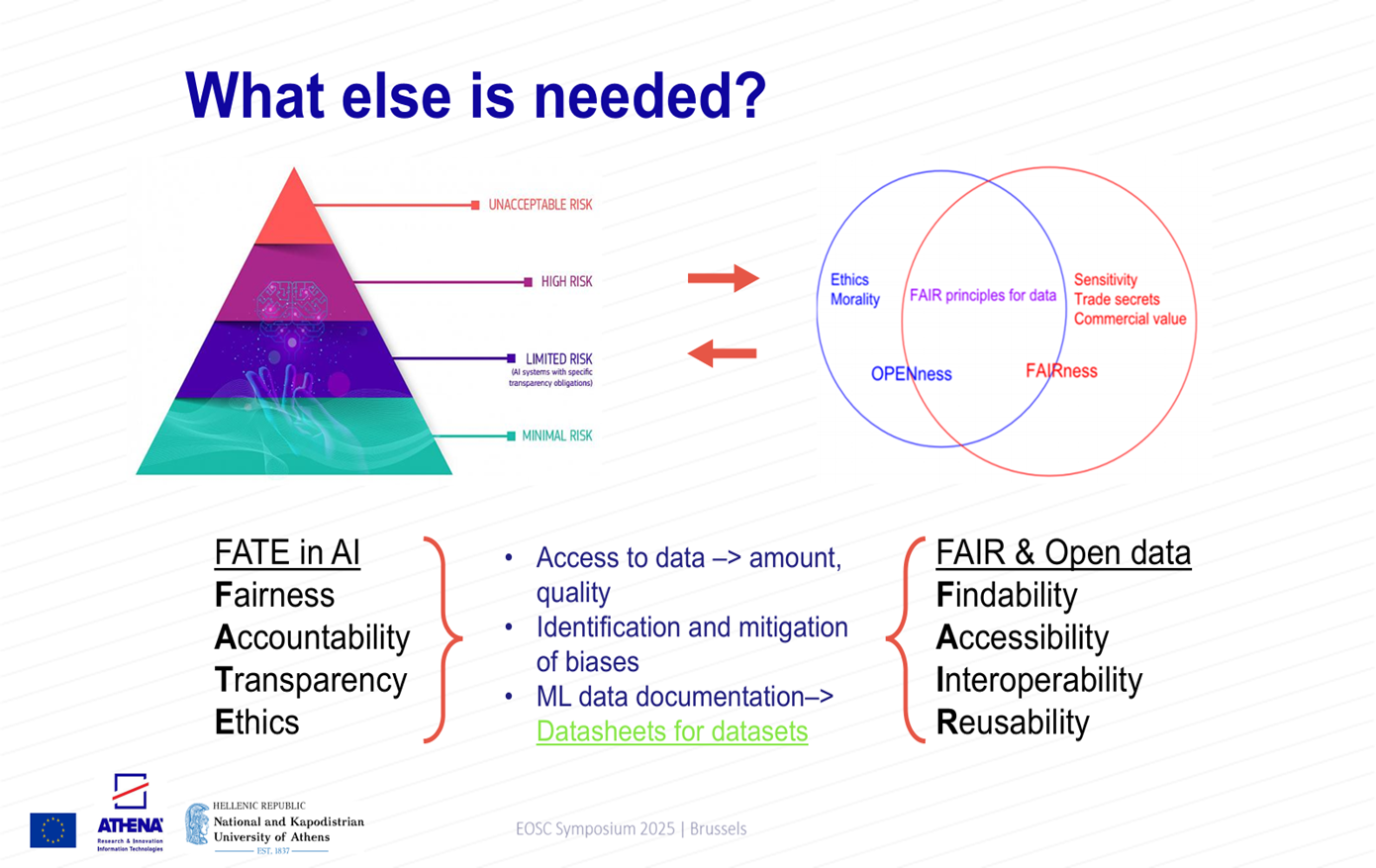

Both organisations aim to strengthen FAIR principles by offering RDM services that contribute to FAIR data practices. This includes developing machine-actionable DMP templates, assessing the FAIRness of digital objects deposited in both data repositories, and ensuring that these principles are embedded within their policies.

- Ongoing activities and Next Steps?

The University of Minho and FCT are currently aligning and planning the project’s activities.

Within the project, we will design two machine-actionable DMP templates, one for each entity. We will then identify researchers to support us in the assessment of the DMP templates. We also expect to co-define metrics and criteria for evaluating DMPs.

Additionally, we will evaluate the FAIRness of digital objects in the research data repositories of both partners: DataRepositóriUM and the POLEN Repository.

We believe that this project, particularly this pilot, will reinforce and support the work that the University of Minho and FCT have been actively carrying out on the adoption of best practices in Open Science and of the FAIR principles.